» What is Mean Stack?

- Mean Stack is an acronym for MongoDB, ExpressJS, Angular and NodeJs

- Its a user friendly JavaScript framework to create dynamic web apps.

- It uses single language i.e. JavaScript on frontend and backend which makes it efficient and modern approach to web development. So, even if developer only knows JavaScript can work on both frontend and backend.

» What are the key principles of scalable frontend architecture?

Key Principles of Scalable Frontend Architecture:

Scalable frontend architecture refers to the design and organization of frontend code that can easily accommodate growth and handle increasing complexity over time. Key principles of scalable frontend architecture include:

- Modularity: Break down the frontend codebase into smaller, reusable modules or components. Each module should have a single responsibility and be easily interchangeable.

- Component-Based Architecture: Adopt a component-based approach where UI elements are encapsulated into independent components. Components can be composed and reused throughout the application.

- Separation of Concerns: Separate presentation logic (UI), business logic, and data logic to ensure clear responsibilities and easier maintenance. For example, separate CSS files for styling, JavaScript files for behavior, and HTML files for structure.

- Scalability: Design the architecture to accommodate growth and handle increased traffic, data, and features without sacrificing performance or maintainability. Use techniques like lazy loading, code splitting, and caching to optimize performance.

- Performance Optimization: Optimize frontend performance by minimizing network requests, reducing page load times, and optimizing rendering efficiency. Use techniques like minification, compression, and asset optimization to improve performance.

- Flexibility and Extensibility: Design the architecture to be flexible and extensible, allowing for easy addition of new features, components, and integrations. Avoid tightly coupling modules and dependencies to facilitate future changes.

- Consistency and Standards: Establish coding standards, naming conventions, and architectural guidelines to ensure consistency and maintainability across the codebase. Document best practices and encourage adherence to standards.

» RDBMS vs NoSQL

- RDBMS stands for Relational Database Management System.

- NoSQL stands for Not only SQL.

- RDBMS is the structured way of storing data. i.e. fixed schema.

- NoSQL is an unstructured way to storing data. i.e. no fixed schema.

- RDBMS is vertically scalable.

- NoSQL is horizontally scalable.

- RDBMS databases are Oracle, MSSQL, MySQL, Postgress

- NoSQL databases are MongoDB, Cassandra, CouchDB, Redis, BigTable.

» What is mean by Horizontally Scaling of Database and Vertically Scaling of database?

Horizontally scaling means you scale by adding more machines into your pool of resources. Ex: MongoDB, Cassandra etc. Vertically scaling means you scale by adding more power (i.e. CPU, RAM) to an existing machine. Ex: MySQL, MSSQL etc.

» What is Node and how it works?

- Node is a open source and cross platform run time environment for executing JavaScript code outside of a browser.

- So basically node server executes the JavaScript code not the browser.

- Node run time uses V8 engine and C++ program to execute JavaScript.

- How Node Works:

- Node applications are asynchronous by default.

- In node we have a single thread to handle all the requests. So when the requests arrives, that single thread is used to handle all those.

Example: "If we send a request to database, our thread doesn't have to wait for the database to return the data. While database is executing query same thread will be used to serve another request. When the database returns the result it puts a message in the Event Queue. Node continuously monitors this event queue in background and when it finds event in the queue it takes it process it".

» Is nodejs a programming language?

No, Node.js is not a programming language. Node.js is a runtime environment for executing JavaScript code on the server-side, and JavaScript is the programming language that is used to write code for Node.js.

JavaScript is a high-level programming language that is used to create dynamic web pages and web applications. It is primarily used in client-side development, running in web browsers to manipulate web page content and behavior. However, with Node.js, JavaScript can also be used for server-side development, allowing developers to write full-stack web applications using a single programming language.

Node.js provides a set of built-in modules that make it easier to work with files, network sockets, and other resources on the server-side. It also includes a package manager called npm (Node Package Manager) that makes it easy to install and manage third-party libraries and modules.

So, while Node.js is not a programming language itself, it is a powerful and flexible runtime environment that allows developers to use JavaScript on the server-side to create scalable and efficient web applications.

» What is server-side runtime environment?

A server-side runtime environment is a software environmt in which server-side applications run. This environment includes a set of libraries, frameworks, and tools that provide a runtime for executing server-side code. The server-side runtime environment is responsible for handling requests from clients and returning responses to clients.Examples of server-side runtime environments include:

Node.js: Node.js is a server-side runtime environment built on the V8 JavaScript engine. It provides an event-driven, non-blocking I/O model that makes it efficient for building scalable network applications.

Ruby on Rails: Ruby on Rails is a web application framework written in the Ruby programming language. It provides a runtime environment for server-side applications and includes features such as a routing system, database access, and templating.

Java EE: Java EE is a platform for building enterprise-level applications in Java. It provides a runtime environment for server-side applications and includes features such as servlets, JavaServer Pages (JSP), and Enterprise JavaBeans (EJB).

These server-side runtime environments provide a powerful and flexible platform for building scalable and efficient server-side applications.

» What is V8 JavaScript engine?

The V8 JavaScript engine is an open-source JavaScript engine developed by Google. It is written in C++ and is used to execute JavaScript code in the Google Chrome browser, as well as in other applications and tools. The V8 engine is designed to be fast and efficient, and it uses various techniques to optimize the performance of JavaScript code. For example, it compiles JavaScript code into machine code rather than interpreting it, which makes it faster to execute. It also uses just-in-time (JIT) compilation to dynamically optimize frequently executed code.

The V8 engine provides a number of features that make it a popular choice for developers, including:

High performance: The V8 engine is designed to execute JavaScript code quickly, making it a good choice for applications that require high performance.

Memory management: The V8 engine uses a garbage collector to manage memory, which reduces the risk of memory leaks and other memory-related issues.

Cross-platform support: The V8 engine can be used on a variety of platforms, including Windows, Mac, Linux, and Android.

Easy integration: The V8 engine is designed to be easy to integrate into other applications and tools, making it a popular choice for building custom JavaScript runtimes.

In addition to being used in the Google Chrome browser, the V8 engine is also used in other applications and tools, including the Node.js runtime, the Electron framework for building desktop applications, and the Deno runtime for building server-side applications in JavaScript and TypeScript.

» Is nodejs multithreaded?

Node.js is technically not multithreaded in the traditional sense, as it uses a single thread to execute JavaScript code. However, Node.js does support asynchronous programming techniques, such as callbacks, promises, and async/await, that allow developers to write code that can handle multiple I/O operations concurrently without blocking the execution of other tasks.

Node.js achieves this concurrency through its event-driven architecture and the use of the event loop. When an I/O operation is initiated, Node.js registers a callback function to handle the operation's completion. While the I/O operation is in progress, Node.js can continue to execute other code, and when the operation completes, the registered callback function is added to the event loop for execution.

This means that while Node.js is not truly multithreaded, it can still handle multiple I/O operations concurrently and provide scalable performance for server-side applications. In addition, Node.js can make use of multiple threads for certain operations, such as file I/O, by using worker threads or child processes to execute code in separate threads.

Node.js provides a cluster module that allows you to create child processes that can share the same port, allowing you to take advantage of multiple CPU cores for handling incoming requests. Each child process runs on a separate thread, allowing you to handle multiple requests concurrently.

const cluster = require('cluster'); const http = require('http'); const numCPUs = require('os').cpus().length; if (cluster.isMaster) { console.log(`Master ${process.pid} is running`); // Fork workers for (let i = 0; i < numCPUs; i++) { cluster.fork(); } cluster.on('exit', (worker, code, signal) => { console.log(`worker ${worker.process.pid} died`); }); } else { // Worker processes have access to the shared server http.createServer((req, res) => { res.writeHead(200); res.end('hello world\n'); }).listen(8000); console.log(`Worker ${process.pid} started`); }

In this example, the cluster.isMaster check is used to determine whether the current process is the master process or a worker process. If it is the master process, it forks the desired number of worker processes and listens for exit events.

Each worker process then creates an HTTP server and listens on port 8000 for incoming requests. Because each worker process runs on a separate thread, they can handle incoming requests concurrently, allowing for better performance and scalability.

Note that while the cluster module allows you to take advantage of multiple CPU cores, it does not provide true multithreading. Each worker process still uses a single thread to execute JavaScript code, but they can handle multiple requests concurrently using asynchronous I/O operations.

Worker Threads: Another way to take advantage of multiple threads in Node.js is to use the worker_threads module, which allows you to create true multithreaded applications in Node.js.

The worker_threads module provides a way to create separate Node.js threads that can communicate with each other using message passing. Each thread has its own event loop and can execute JavaScript code independently of the main thread.

Here's an example of using the worker_threads module to create a multithreaded application that calculates the sum of an array of numbers:

const { Worker, isMainThread, parentPort, workerData } = require('worker_threads'); if (isMainThread) { // This is the main thread const numCPUs = require('os').cpus().length; const nums = Array.from({ length: 10000000 }, (_, i) => i + 1); const chunkSize = Math.ceil(nums.length / numCPUs); const workers = []; for (let i = 0; i < numCPUs; i++) { const worker = new Worker(__filename, { workerData: { start: i * chunkSize, end: (i + 1) * chunkSize, nums: nums.slice(i * chunkSize, (i + 1) * chunkSize) } }); workers.push(worker); worker.on('message', (msg) => { console.log(`Worker ${worker.threadId}: Sum is ${msg}`); }); worker.on('error', (err) => { console.error(`Worker ${worker.threadId} error: ${err}`); }); worker.on('exit', (code) => { if (code !== 0) { console.error(`Worker ${worker.threadId} exited with code ${code}`); } }); } for (let worker of workers) { worker.postMessage('calculate'); } } else { // This is a worker thread const sum = workerData.nums.reduce((acc, n) => acc + n, 0); parentPort.postMessage(sum); }

In this example, the main thread creates an array of numbers and divides it into chunks based on the number of available CPU cores. It then creates a new worker thread for each CPU core and passes a slice of the array to each worker as workerData.

Each worker then calculates the sum of its slice of the array and sends the result back to the main thread using parentPort.postMessage(). The main thread listens for message events from each worker and logs the final result.

This approach allows you to take full advantage of multiple CPU cores in Node.js and can significantly improve the performance of CPU-bound tasks. However, it's important to note that creating too many worker threads can actually degrade performance, so it's important to strike a balance between using multiple threads and avoiding excessive overhead.

» What is a module in NodeJS?

A module is a reusable block of code that encapsulates related functionality and can be loaded into other files using the require() function in NodeJS. Modules help to keep the code organized, modular, and easy to maintain.

» How to create a module in NodeJS?

To create a module in NodeJS, you need to export a function, object, or a variable from a file using the module.exports object. Here is an example:

// greet.js module.exports = { greet: function(name) { console.log(`Hello, ${name}!`); } };

You can then load this module into another file using the require() function:

// app.js const greet = require('./greet'); greet('John'); // Output: Hello, John!

» Callback function in Node JS:

Callback is a function which gets called when the result of an asynchronous operation is ready.

Example:

// calling a getUser function getUser(101, function(user){ console.log('user id', user.id); console.log('user name', user.username); }); function getUser(userId, callback){ setTimeout(function(){ callback({id:userId, username: "KJ"}); // callback function },2000); }

» What is Callback Hell in Node JS:

- Callback hell means nested callback functions

- Callback hell a.k.a. Pyramid doom

Example:

getUser(param1, function(err, paramx){ getUserDepartment(paramx, function(err, result){ insertSomeData(result, function(err){ doSomeAnotherOperation(someparameter, function(s){ againDosomethingElse(function(x){ }); }); }); }); });

» What is difference between callbacks and promises:

- Callbacks are simply functions in JavaScript which are to be called and then executed after the operation of an asynchronous function has finished.

- In JavaScript functions itself considered as an Objects, so we all know that we can pass objects to another function as an argument, similarly we can pass functions as an argument to another functions.

- setTimeout() is an example.

- Promises are nothing but a much more improvised approach of handling asynchronous code as compare to callbacks.

- Promises received two callbacks in constructor function: resolve and reject.

- The resolve callback is used when the execution of promise performed successfully and the reject callback is used to handle the error cases.

- The result of the computation might be that either the promise is fulfilled with value or rejected with a reason.

» What is difference between Promises and Observables

- Promises:

- Promises handles single event when asyc operation completes or fails.

- If promises gets resolved then it returns a single value.

- If promises gets rejected then it return an error.

- Promises are not cancellable, means, if we initiate any promise request we cannot cancel it.

- In Promises if request gets failed then we cannot retry it.

- Promises are part of JavaScript.

- Observable:

- Observable is an array or sequence of event over time.

- Observable works on streams of data.

- Obsevable allows us to pass zero or more events where the callback is called for each event.

- Unlike Promises obsevable are cancellable. We can do it by cancelling subscription.

- If the request gets failed then we can retry it using retry() method.

» What is Mongoose in Node JS.

- Mongoose in a JavaScript framework commonly used in Node.js applications.

- It works a an ODM (Object Data Modeler) for Node applications.

- Using mongoose we can easily implement validations and query API so that we can interact with MongoDB database.

- It allows you to define objects with a strongly typed schema that is mapped to a MongoDB document.

- Mongoose works with below schema types:

- Number, String, Date, Buffer, Boolean, Mixed, Array, Object.

// Install mongoose npm install mongoose --save // Add mongoose package in your node.js file var mongoose = require('mongoose'); // Connect with mongoose mongoose.connect('mongodb://localhost/dbname');

» Some important NodeJS packages for your application? (WIP)

const config = require('config'); // Manage the npm configuration files const path = require('path'); // Path module provides utilities for working with file and directory paths const logger = require('morgan'); // HTTP request logging moddleware. generates request logs const cookieParser = require('cookie-parser'); // Extract cookies and puts cookie info in req obj const bodyParser = require('body-parser'); // Extract body portion of request stream & exposes it on req.body const bluebird = require('bluebird'); // Javascript promise library const mongoose = require('mongoose'); // Object data modelling library for nodejs and mongodb

» What is middleware in ExpressJS?

Middleware is a function that sits between the client and the server and can intercept and modify the incoming request or the outgoing response. Middleware functions can be used for logging, authentication, error handling, and more.

» How to use middleware in ExpressJS?

const express = require('express'); const app = express(); // Middleware function const logger = (req, res, next) => { console.log(`${req.method} ${req.url}`); next(); // Call the next middleware function }; app.use(logger); // Use the middleware function app.get('/', (req, res) => { res.send('Hello, World!'); }); app.listen(3000, () => { console.log('Server running at http://localhost:3000/'); });

» How to use middleware in ExpressJS?

To use middleware in ExpressJS, you can use the app.use method. Here is an example:

const express = require('express'); const app = express(); app.use((req, res, next) => { console.log(`Received request for ${req.url}`); next(); }); app.use(express.static('public')); app.listen(3000, () => { console.log('Server started on port 3000'); });

This code defines two middleware functions: one that logs the URL of each request, and one that serves static files from the public directory. The app.use method is used to add these middleware functions to the Express application.

» How to handle form data in ExpressJS?

To handle form data in ExpressJS, you can use the body-parser middleware. Here is an example:

const express = require('express'); const bodyParser = require('body-parser'); const app = express(); // Use body-parser middleware app.use(bodyParser.urlencoded({ extended: false })); app.get('/', (req, res) => { res.send(` <form method="post" action="/submit"> <input type="text" name="name" placeholder="Name"> <button type="submit">Submit</button> </form> `); }); app.post('/submit', (req, res) => { const name = req.body.name; res.send(`Hello, ${name}!`); }); app.listen(3000, () => { console.log('Server running at http://localhost:3000/'); });

» How to use streams in NodeJS?

Streams are a powerful tool in NodeJS that allow you to process data piece by piece, rather than loading the entire data into memory at once. There are four types of streams in NodeJS: Readable, Writable, Duplex, and Transform. Here is an example of using a Readable stream to read a file:

const fs = require('fs'); const readStream = fs.createReadStream('myfile.txt'); readStream.on('data', (chunk) => { console.log(`Received ${chunk.length} bytes of data.`); }); readStream.on('end', () => { console.log('Finished reading file.'); });

» Explain HTTP status codes

HTTP status codes indicate whether a request has been successfully completed. They are grouped into five ranges, each of which defines where the error occurred and the number of which defines the error itself:

1XX: Informational codes, which indicate that the server is acknowledging and processing the request

2XX: Success codes, which indicate that the server has successfully received, understood, and processed the request

3XX: Redirection codes, which indicate that the server has received the request but there is a redirect to somewhere else

4XX: Client error codes, which indicate that the server could not find or reach the page or website

5XX: Server error codes, which indicate that the client made a valid request but the server was unable to complete it

» What are the HTTP codes to describe an attempt to access retricted resources

401 Unauthorized: The request requires user authentication. This status is sent when authentication is possible but has failed or has not yet been provided.

403 Forbidden: The server understands the request but refuses to authorize it. This is used when the server knows the identity of the client but does not have permission to access the resource.

» What is Angular framework? How Angular works?

Angular is an open-source front-end web application framework that is maintained by Google. It is designed to simplify the process of building dynamic, single-page web applications (SPAs) and mobile applications.

Angular works by allowing developers to use declarative templates to create HTML pages that can be extended with dynamic content and functionality. This is achieved through a combination of features, including:

Components: Angular applications are built using components, which are reusable code modules that define the behavior and appearance of a part of a web page.

Directives: Directives are attributes that can be added to HTML elements to provide additional functionality, such as data binding or event handling.

Services: Services are a way to share data and functionality across multiple components in an application.

Dependency Injection: Angular uses dependency injection to manage dependencies between different components and services.

Routing: Angular provides a powerful routing system that allows developers to create complex, single-page applications with multiple views.

Angular is built using TypeScript, a superset of JavaScript that provides additional features such as type checking and classes. TypeScript allows developers to write cleaner and more maintainable code, and provides additional tools for debugging and development.

Overall, Angular provides developers with a powerful set of tools for building modern web applications that are fast, responsive, and easy to maintain.

» How angular works internally?

Angular works by using a combination of features and tools that work together to create a dynamic, responsive web application. Here are some of the key components of Angular's internal workings:

The Angular Compiler: Angular uses a compiler to translate templates and component metadata into executable code that can be run in the browser. This allows Angular to optimize performance and minimize the amount of work that needs to be done at runtime.

Change Detection: Angular uses a technique called change detection to track changes in the application's state and update the UI accordingly. When a change is detected, Angular updates the affected parts of the UI automatically, without the need for manual intervention.

Dependency Injection: Angular uses dependency injection to manage dependencies between components and services. This allows components and services to be easily replaced or modified without affecting the rest of the application.

RxJS: Angular makes extensive use of the Reactive Extensions for JavaScript (RxJS) library to handle asynchronous data streams and events. RxJS provides a powerful and flexible way to manage complex data flows in Angular applications.

Angular Modules: Angular applications are organized into modules, which are collections of related components, services, and directives. Modules allow developers to compartmentalize their code and make it easier to manage and maintain.

Angular CLI: The Angular Command Line Interface (CLI) is a tool that provides developers with a streamlined way to create, test, and deploy Angular applications. The CLI automates many common development tasks and provides a consistent development experience across different projects.

Overall, Angular's internal workings are designed to provide developers with a powerful and flexible set of tools for building modern web applications that are fast, responsive, and easy to maintain.

» How to improve performance of Angular application?

Angular is a great framework but its large as well, and not making it optimized end up performance issues. So there are multiple ways to improve performance of our angular app. Example:

- AOT Compilation for production builds

- Lazy loading of modules

- Minification

- OnPush change detection if the application is big

- Use of module bundlers

- To avoid function call from templates

- Pure Pipes

- Unsubscribe observable

Read below article for more details:

https://angular-guru.com/blog/angular-performance-tips

https://github.com/mgechev/angular-performance-checklist

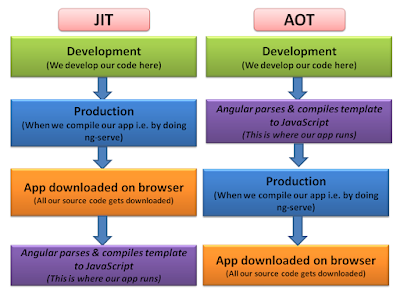

» Angular Compilation : AOT vs JIT

Angular offers two types of code compilation i.e. AOT and JIT.

What is mean by code compilation in angular: Well it DOES NOT MEAN COMPILING TYPESCRIPT INTO JAVASCRIPT because that is job of CLI. Angular also needs to compile our templates i.e. html dom, so it first parses these html files and compiles it into JavaScript because accessing JavaScript is faster than accessing HTML DOM.

JIT:

- JIT stands for Just in Time compilation

- It compiles our code i.e. HTML and TypeScript on browser at runtime hence performance wise its slow.

- For JIT you don't need to build after changing your code or before reloading the browser page which is suitable for local env while development.

Commands :

ng build, ng serve

AOT:

- AOT stands for Ahead of Time compilation.

- It compiles at the build time i.e. machine itself does the compilation process via command line.

- It is suitable mainly for production build as you don't need to deploy compiler.

- It is secure, original source code node disclosed.

- Detect template errors earlier.

- Commands :

ng build --aot, ng serve --aot, ng build --prod

» Angular lifecycle hooks?

Angular components goes through different phases from being created and destroyed. These phases are called as Angular Lifecycle Hooks. Following are the orders in which different phases happen.

- ngOnChanges() - calls when Angular sets or re-sets the data-bound properties. Called before ngOnInit() and whenever one or more data-bound input properties change.

- ngOnInit() - called when Angular initializes the Component. It is called only once.

- ngDoCheck() - Detect and act upon changes that Angular can't or won't detect on its own. It is called immediately after ngOnChanges() and ngOnInit().

- ngAfterContentInit() - Respond after Angular projects external content into the component's view. It is a Component only hook and can not be called for a directive.

- ngAfterContentChecked() - Respond after Angular checks the content projected into the component. Called after the ngAfterContentInit(). It will also be called every subsequent ngDoCheck(). This is a Component-only hook.

- ngAfterViewInit() - Respond after Angular initializes the component's views and child views. This is a Component-only hook.

- ngAfterViewChecked() - Respond after Angular checks the component's views and child views. A component-only hook.

- ngOnDestroy() - Called just before Angular destroys the directive/component. This is the place we can unsubscribe observables and detach event handlers to avoid memory leaks.

» Why constructor is required if onInit() is there in Angular?

Constructor:

The constructor is a standard TypeScript feature used for initializing class instances and setting up dependencies. It's executed when an instance of the class is created, typically by Angular's dependency injection system. In Angular components, the constructor is primarily used for injecting dependencies via constructor parameters, such as services or other components. It's also where you can perform basic initialization tasks that don't depend on Angular's lifecycle hooks, such as initializing properties or configuring class variables.

Example:

Consider a user management component in an Angular application. In the constructor, you might inject a UserService dependency to fetch user data from the server. Additionally, you might initialize component properties or configure default settings.

import { Component } from '@angular/core'; import { UserService } from './user.service'; @Component({ selector: 'app-user-management', templateUrl: './user-management.component.html', styleUrls: ['./user-management.component.css'] }) export class UserManagementComponent { users: User[]; constructor(private userService: UserService) { // Initialize properties or perform basic setup this.users = []; } // Other component methods and lifecycle hooks... }

ngOnInit():

The ngOnInit() method is an Angular lifecycle hook that is called after Angular has initialized all data-bound properties of a directive or component. It's typically used to perform initialization tasks specific to Angular components, such as fetching initial data from services, subscribing to observables, or initializing component state. Unlike the constructor, ngOnInit() is Angular-specific and is called as part of Angular's component lifecycle, making it suitable for tasks that require access to Angular-specific features or dependencies.

Example:

Continuing with the user management example, in the ngOnInit() method, you might fetch initial user data from the UserService and initialize the users array. This ensures that the component is fully initialized before performing any data-fetching operations.

import { Component, OnInit } from '@angular/core'; import { UserService } from './user.service'; @Component({ selector: 'app-user-management', templateUrl: './user-management.component.html', styleUrls: ['./user-management.component.css'] }) export class UserManagementComponent implements OnInit { users: User[]; constructor(private userService: UserService) {} ngOnInit(): void { // Fetch initial user data from the UserService this.userService.getUsers().subscribe((users) => { this.users = users; }); } // Other component methods... }

If you perform data-fetching tasks, such as calling the getUsers() method, directly in the constructor instead of the ngOnInit() method in an Angular component, it may lead to unexpected behavior and potential issues.

Here's what would happen:

- Early Execution: When you fetch data in the constructor, it gets executed as soon as the component is instantiated.

This means that the data-fetching operation will occur before Angular has finished initializing the component and its data-bound properties.

- Potential Race Conditions: If the data-fetching operation involves asynchronous tasks, such as HTTP requests, there's a risk of race conditions.

Since the constructor does not wait for asynchronous operations to complete, the component's properties may not be fully initialized when the data arrives.

This can lead to undefined or unexpected behavior when accessing component properties bound to the fetched data.

- Violation of Angular Lifecycle: Angular's lifecycle hooks, such as ngOnInit(), exist to provide a standardized way to perform initialization tasks after Angular has initialized component properties.

By performing data-fetching tasks in the constructor, you're bypassing Angular's lifecycle and violating best practices for component initialization.

- Potential Performance Issues: Fetching data in the constructor may lead to unnecessary overhead and performance issues, especially if the data-fetching operation is expensive or resource-intensive.

This can impact the initial rendering performance of the component and may cause delays in displaying the component's content.

In summary, while it's technically possible to fetch data in the constructor, it's generally not recommended in Angular applications. Instead, using the ngOnInit() lifecycle hook ensures that data-fetching tasks occur at the appropriate stage of the component lifecycle, avoiding potential issues related to race conditions, Angular lifecycle violations, and performance overhead.

» What are the difference between Renderer and ElementRef in Angular 4?

The Renderer in Angular is a class that is an abstraction over the DOM. Using the Renderer for manipulating the DOM doesn't break server-side rendering or Web Workers (where direct access to the DOM would break).

For example, if we want to focus an input element, we could use template reference variable and with the help of @ViewChild decorator we can access the native DOM. But we are actually depending the native DOM and lose the opportunity to be able to run our script also in no-DOM environments like native mobile or Web workers. Remember that Angular is a platform, and the browser is just one option for where we can render our app.

import { Directive, Renderer, ElementRef } from '@angular/core'; @Directive({ selector: '[exploreRenderer]' }) export class ExploreRendererDirective { private nativeElement : Node; constructor( private renderer : Renderer, private element : ElementRef ) { this.nativeElement = element.nativeElement; } } //Where ever we want to use the native DOM, we could use this directive on the element like <div exploreRenderer></div>

ElementRef is a class that can hold a reference to a DOM element. This is again an abstraction to not break the environments where the browsers DOM isn't actually available. If ElementRef is injected to a component, the injected instance is a reference to the host element of the current component.

There are other ways to acquire an ElementRef instance like @ViewChild(), @ViewChildren(), @ContentChild(), @ContentChildren(). In this case ElementRef is a reference to the matching element(s) in the template or children.

Renderer and ElementRef are not "either this or that", but instead they have to be used together to get full platform abstraction.

Renderer acts on the DOM and ElementRef is a reference to an element in the DOM the Renderer acts on.

» What is the difference between @Inject and @Injectable?

@Inject:

The @Inject decorator is used to specify a dependency token when injecting a service or a value into a component, directive, or another service.

It allows you to manually specify the provider token to be used for dependency injection when there are multiple providers available for the same type.

You typically use @Inject when you have multiple tokens providing the same type, and you want to specify which one to use.

It's commonly used in conjunction with Angular's dependency injection system to resolve dependencies when there are token conflicts or when you want to inject a value from a different provider.

import { Injectable, Inject } from '@angular/core'; import { TOKEN_NAME } from './constants'; @Injectable() export class MyService { constructor(@Inject(TOKEN_NAME) private myToken: any) { } }

@Injectable:

The @Injectable decorator is used to define a class as a service that can be injected into other components, directives, or services.

It marks a class as eligible for dependency injection by Angular's injector.

When a class is decorated with @Injectable, Angular's dependency injection system can create instances of that class and inject them where needed.

It's commonly used to define services, which are singletons in Angular and can provide functionality that can be shared across multiple components.

import { Injectable } from '@angular/core'; @Injectable() export class MyService { constructor() { } // Service methods go here }

In summary, @Inject is used to specify a dependency token for a specific injection point, while @Injectable is used to mark a class as a service that can be injected into other parts of your Angular application.

» What is Change Detection in Angular? How it is improved from Angular 1.x?

Change detection is the process that allows Angular to keep our views in sync with our models. In Angular 1, we have two-way data bindings and have a long list of watchers that needed to be checked every-time a digest cycle was started. This was called dirty checking and it was the only change detection mechanism available.

In Angular, the flow of information is unidirectional, even when using ngModel to implement two way data binding. In this new version, our code is responsible for updating the models. Angular is only responsible for reflecting those changes in the components and the DOM by means of the selected change detection strategy.

By default, Angular defines a certain change detection strategy for every component in our application. To make this definition explicit, we can use the property changeDetection of the @Component decorator. Angular provides two Change Detection Strategies:

ChangeDetectionStrategy.Default - The default strategy for the change detection is to traverse all the components of the tree even if they do not seem to have been modified.

ChangeDetectionStrategy.OnPush - This instructs Angular to run change detection on these components and their sub-tree only when new references are passed to them versus.

Below is the example code for default change detection:

//app.component.ts import { Component } from '@angular/core'; @Component({ selector: 'app-root', templateUrl: './app.component.html' }) export class AppComponent { fruits = ['Banana', 'Apple', 'Orange']; addFruit(fruit) { this.fruits.push(fruit); } }

<input #newFruit type="text" placeholder="Enter a fruit"> <button (click)="addFruit(newFruit.value)">Add fruit</button> <app-child [items]="fruits"></app-child>

Child component

import { Component, Input } from '@angular/core'; @Component({ selector: 'app-child', templateUrl: './child.component.html' }) export class ChildComponent { @Input() items: string[]; }

Child component's template:

<ul> <li *ngFor="let item of items">{{ item }}</li> </ul>

Angular creates change detector classes at runtime for each component, and keep track of the previous values to check with the new value whenever a change is detected. If we add a fruit in the parent component it will get reflected in the child immediately. The OnPush strategy is different that it will only run the change detection when the component's state is mutated. The same child component with this strategy is as follow:

import { Component, Input, ChangeDetectionStrategy } from '@angular/core'; @Component({ selector: 'app-child', templateUrl: './child.component.html', changeDetection: ChangeDetectionStrategy.OnPush }) export class ChildComponent { @Input() items: string[]; }

Now if we add a fruit in the parent component, it will not be updated in the child. Because the child component is receiving the same array reference every time. In order to make this work, we need to pass the new reference of fruits array every time we change it.

//app.component.ts addFruit(fruit) { this.foods = [...this.fruits, fruit]; }

Now everything is working fine because Angular able to identify new reference and can run the change detection. OnPush change detection is very useful when our application has lot of components as it will boost the application performance.

» What is Interpolation?

Interpolation is a special syntax in Angular which binds the properties we defined in our component into the template. It is represented by double curly braces {{}}. Angular converts property name into string value of the corresponding component property.

Example:

<div> {{name}} <img src="{{imageUrl}}" alt="{{imageTitle}}> </div>

» What are the Decorators in Angular?

Decorators are the new features of TypeScript and they are used throughout in Angular (2+). Decorators are nothing but a functions and as per the documentation in TypeScript decorators are special kind of declarations that can be attached to class or method.

There are 5 types of decorators:

- Class Decorator

- Method Decorator

- Property Decorator

- Accessor Decorator

- Parameter Decorator

In Angular, a decorator is a design pattern that allows you to enhance or modify the behavior of classes, methods, or properties at runtime without changing their source code. Decorators are typically functions that take one or more arguments and return a function, which is then applied to the target object. In Angular, decorators are often used with classes, components, directives, services, and dependency injection.

Imagine you're building an e-commerce website with Angular, and you have a component called ProductComponent that displays product details. You want to enhance the functionality of this component by adding logging capabilities whenever a user interacts with it.

import { Component } from '@angular/core'; @Component({ selector: 'app-product', templateUrl: './product.component.html', styleUrls: ['./product.component.css'] }) export class ProductComponent { productName: string = 'iPhone 13'; productPrice: number = 999; constructor() { } addToCart(): void { console.log(`Product added to cart: ${this.productName}`); // Add logic to add product to cart } buyNow(): void { console.log(`Product purchased: ${this.productName}`); // Add logic to complete purchase } }

Now, let's say you want to log a message every time the addToCart() and buyNow() methods are called. Instead of adding logging code directly into these methods, you can use decorators to dynamically enhance their behavior.

import { Component } from '@angular/core'; // Decorator function to log method calls function logMethod(target: any, propertyKey: string, descriptor: PropertyDescriptor): void { const originalMethod = descriptor.value; descriptor.value = function (...args: any[]) { console.log(`Method called: ${propertyKey}`); const result = originalMethod.apply(this, args); return result; }; } @Component({ selector: 'app-product', templateUrl: './product.component.html', styleUrls: ['./product.component.css'] }) export class ProductComponent { productName: string = 'iPhone 13'; productPrice: number = 999; constructor() { } @logMethod addToCart(): void { // Add logic to add product to cart } @logMethod buyNow(): void { // Add logic to complete purchase } }

In this example:

We define a decorator function called logMethod() that takes the target object, property key, and property descriptor as arguments.

Inside the decorator function, we replace the original method with a new function that logs a message before calling the original method.

We apply the logMethod decorator to the addToCart() and buyNow() methods using the @ syntax.

Now, whenever the addToCart() or buyNow() methods are called, a message will be logged to the console indicating that the method was called. This demonstrates how decorators can be used to enhance or modify the behavior of methods in Angular components.

» Lets see example of all decorators

### Class Decorator:

A class decorator is applied to a class declaration and can be used to modify or enhance the behavior of the class.

Example:

function logClass(target: any) { console.log('Class decorator invoked:', target); } @logClass class MyClass { constructor() { console.log('MyClass instantiated'); } }

In this example, the `logClass` function acts as a class decorator. It is applied to the `MyClass` declaration using the `@` syntax. When `MyClass` is instantiated, the class decorator is invoked, and the target parameter receives a reference to the constructor function of the class.

### Method Decorator:

A method decorator is applied to a method within a class and can be used to modify or enhance the behavior of that method.

Example:

function logMethod(target: any, propertyKey: string, descriptor: PropertyDescriptor) { console.log('Method decorator invoked for method:', propertyKey); } class MyClass { @logMethod myMethod() { console.log('Executing myMethod'); } }

In this example, the `logMethod` function acts as a method decorator. It is applied to the `myMethod` method using the `@` syntax. When `myMethod` is called, the method decorator is invoked, and the target, propertyKey, and descriptor parameters receive information about the method being decorated.

### Property Decorator:

A property decorator is applied to a property within a class and can be used to modify or enhance the behavior of that property.

Example:

function logProperty(target: any, propertyKey: string) { console.log('Property decorator invoked for property:', propertyKey); } class MyClass { @logProperty myProperty: string = 'Hello'; }

In this example, the `logProperty` function acts as a property decorator. It is applied to the `myProperty` property using the `@` syntax. When the class is instantiated, the property decorator is invoked, and the target and propertyKey parameters receive information about the property being decorated.

### Accessor Decorator:

An accessor decorator is applied to a getter or setter within a class and can be used to modify or enhance the behavior of that accessor.

Example:

function logAccessor(target: any, propertyKey: string, descriptor: PropertyDescriptor) { console.log('Accessor decorator invoked for accessor:', propertyKey); } class MyClass { private _myProperty: string = 'Hello'; @logAccessor get myProperty(): string { return this._myProperty; } @logAccessor set myProperty(value: string) { this._myProperty = value; } }

In this example, the `logAccessor` function acts as an accessor decorator. It is applied to both the getter and setter of the `myProperty` property using the `@` syntax. When the getter or setter is called, the accessor decorator is invoked, and the target, propertyKey, and descriptor parameters receive information about the accessor being decorated.

### Parameter Decorator:

A parameter decorator is applied to a parameter declaration within a method or constructor and can be used to modify or enhance the behavior of that parameter.

Example:

function logParameter(target: any, propertyKey: string, parameterIndex: number) { console.log('Parameter decorator invoked for parameter:', parameterIndex); } class MyClass { myMethod(@logParameter param1: string, @logParameter param2: number) { console.log('Executing myMethod with parameters:', param1, param2); } }

In this example, the `logParameter` function acts as a parameter decorator. It is applied to the parameters of the `myMethod` method using the `@` syntax. When `myMethod` is called, the parameter decorator is invoked for each parameter declaration, and the target, propertyKey, and parameterIndex parameters receive information about the parameter being decorated.

These are the various types of decorators available in TypeScript, each serving a different purpose and allowing for flexible and powerful meta-programming capabilities.

» Data Bindings in Angular

There are two types of data binding angular supports. 1) One Way Data Binding & 2) Two Way Data Binding

One Way Data Binding:

From Component to Template (i.e. Source to View)

{{expression}} // interpolation [target]="expression" // property binding bind-target="expression" // attribute

From Template to Component (i.e. View to Source) via event binding

(target)="statement" on-target="statement"

Two Way Data Binding:

From Component to Template and Template to Component (both way)

[(target)]="expression" bindon-target="expression"

» Filters in Angular: Explanation and Examples

1. Basic Usage: Filters in Angular are used to format and manipulate data displayed in templates. They allow you to transform data before it is rendered to the user. Here's a basic example:

<!-- Template --> <p>{{ dateValue | date }}</p> <!-- Controller --> $scope.dateValue = new Date();

This example uses the built-in Angular filter date to format the dateValue as a human-readable date string.

2. Custom Filters: You can also create custom filters to perform specific transformations on data. For example, let's create a filter to capitalize the first letter of a string:

// Custom Filter app.filter('capitalize', function() { return function(input) { if (!input) return ''; return input.charAt(0).toUpperCase() + input.slice(1); }; }); <!-- Template --> <p>{{ textValue | capitalize }}</p> <!-- Controller --> $scope.textValue = 'hello world';

This custom capitalize filter takes a string input and returns the same string with the first letter capitalized.

3. Chaining Filters: Filters can be chained together to perform multiple transformations on data. For example, let's first capitalize a string and then convert it to uppercase:

<!-- Template --> <p>{{ textValue | capitalize | uppercase }}</p> <!-- Controller --> $scope.textValue = 'hello world';

This example first capitalizes the textValue string using the capitalize filter and then converts it to uppercase using the uppercase filter.

4. Filtering Arrays: Filters can also be applied to arrays to filter or sort data based on specific criteria. For example, let's filter an array of objects based on a property value:

<!-- Template --> <ul> <li ng-repeat="item in items | filter: { category: 'fruit' }">{{ item.name }}</li> </ul> <!-- Controller --> $scope.items = [ { name: 'Apple', category: 'fruit' }, { name: 'Carrot', category: 'vegetable' }, { name: 'Banana', category: 'fruit' } ];

In this example, the ng-repeat directive is used to iterate over the items array and display only those items whose category property is 'fruit'.

Overall, filters in Angular provide a powerful way to format, transform, and manipulate data in templates, making it easier to present data to users in a desired format.

» What are Pipes in Angular? Explain Pure and Impure Pipes? How would you create custom pipe?

Pipes are used to transform data, when we only need that data transformed in template. It is similar to what filters in AngularJS.

We use a pipe with the | syntax in the template, the | character is called the pipe character.

import { Component } from '@angular/core'; @Component({ selector: 'my-app', template: `<div>currency is {{ currency:'USD' }}</div>` }) export class AppComponent { currency = 5500.20; } // output: // currency is USD 5,500.20

We can even chain pipe together, ex:

{{ 5500.20 | currency: 'USD' | lowercase }} // output usd 5,500.20.

Built-in Pipes: There are various built-in pipes available in Angular. Ex. Date, Currency, Decimal, JSON, UpperCase, LowerCase etc.. Full list is here: https://angular.io/api?type=pipe

Custom Pipe: We can create custom pipe using @Pipe decorator and annotate a class like:

import { Pipe } from '@ angular/ core'; . . @Pipe({ name:"test" }) class TestPipe { } // The name parameter for the Pipe decorator is how the pipe will be called in templates.

Transform function: The actual logic for pipe is put in a function called transform on the class. Example:

import { Pipe, PipeTransform } from '@angular/core'; /* * It returns the split string based on the seperator and index * @Parameters: * @input: input string from pipe * @seperator: separator to split string (string) * @index: to return the index value from an array (number) * * @Usage: * value | splitStr:"seperator":index * * @Example: So if we have a string i.e. Hi_Hello_World and if we want to get only World here then we'll * {{ Hi_Hello_World | splitStr:"_":[2] }} * * @Output: World */ @Pipe({ name: 'splitStr' }) export class SplitPipe implements PipeTransform { transform(input: any, seperator: string, index: number): string { return input.split(seperator)[index]; } }

The first argument to the transform function is the value that is passed into the pipe, i.e. the thing that goes before the | in the expression.

The second parameter to the transform function is the first param we pass into our pipe, i.e. the thing that goes after the : in the expression.

Pure Pipe: A pure pipe is only called when Angular detects a change in the value or the parameters passed to a pipe. For example, any changes to a primitive input value (String, Number, Boolean, Symbol) or a changed object reference (Date, Array, Function, Object).

Impure Pipe: An impure pipe is called for every change detection cycle no matter whether the value or parameters changes. i.e, An impure pipe is called often, as often as every keystroke or mouse-move

» What is the difference between pipe and filter in Angular?

Pipe: In Angular, a "pipe" is a feature that allows you to transform data in a template before displaying it to the user. Pipes are used within template expressions (in curly braces {{ }}) to apply transformations to values. Angular provides several built-in pipes for common tasks like formatting dates, numbers, and currency, as well as for filtering and sorting arrays. You can also create custom pipes to perform specific transformations on data.

Filter: "Filter" is a term that is often used to refer to a specific type of pipe used for filtering arrays of data. In Angular, the filter pipe allows you to filter an array based on specific criteria and display only the items that meet the criteria. The filter pipe is commonly used with the *ngFor directive in Angular templates to display a subset of items from an array. While "filter" can refer specifically to the filter pipe, it can also be used more broadly to describe any type of data transformation or manipulation.

In summary, while "pipe" is a more general term in Angular that refers to a feature for transforming data in templates, "filter" typically refers to a specific type of pipe used for filtering arrays of data. However, the two terms are related, as the filter pipe is a specific type of pipe used for data transformation.

» What is async pipe? What is the purpose of async pipe? Explain with simple example

The async pipe is a built-in Angular pipe that is used to automatically subscribe to an Observable or Promise and unwrap the data it emits. It simplifies the process of working with asynchronous data streams in Angular templates by handling subscriptions and unsubscriptions automatically.

The purpose of the async pipe is to avoid managing subscriptions manually in component classes and templates, reducing the risk of memory leaks and improving code readability.

Here's a simple example to demonstrate the usage of the async pipe:

Suppose you have a component that retrieves user data asynchronously from a service and exposes it as an Observable:

import { Component } from '@angular/core'; import { Observable } from 'rxjs'; import { UserService } from './user.service'; @Component({ selector: 'app-user', template: ` <div *ngIf="user$ | async as user"> <h2>Welcome, {{ user.name }}</h2> <p>Email: {{ user.email }}</p> </div> ` }) export class UserComponent { user$: Observable<any>; constructor(private userService: UserService) { this.user$ = this.userService.getUser(); } }

In this example:

- - The `user$` property is an Observable that emits user data.

- - The `UserService` is responsible for fetching user data asynchronously.

- - The async pipe (`*ngIf="user$ | async as user"`) subscribes to the `user$` Observable and unwraps the emitted user data into the `user` variable.

- - Inside the `div`, we can access properties of the `user` object directly in the template.

By using the async pipe, we eliminate the need to manually subscribe to the Observable in the component class and handle the subscription lifecycle. The async pipe takes care of subscribing to and unsubscribing from the Observable automatically, ensuring clean-up and preventing memory leaks.

» What is Polyfills.ts in Angular?

polyfills.ts is a file in an Angular project that contains polyfills, which are code snippets that provide modern JavaScript features to older browsers that do not support them natively. Polyfills bridge the gap between the features supported by modern browsers and those supported by older browsers, ensuring that an Angular application can run consistently across different browsers and environments.

The polyfills.ts file typically includes polyfills for various features such as:

ES6/ES7 features: Polyfills for features introduced in ECMAScript 2015 (ES6) and later versions, such as Promise, Array.prototype.includes, Array.from, Object.assign, etc.

Web standards: Polyfills for web standards and APIs that are not fully supported in all browsers, such as fetch for making network requests, IntersectionObserver for observing changes to the intersection of elements with the viewport, Intl for internationalization and localization, etc.

Browser APIs: Polyfills for browser-specific APIs or features, such as EventTarget, Element.prototype.classList, Element.prototype.closest, etc.

By including these polyfills in the polyfills.ts file, an Angular application can ensure consistent behavior and functionality across different browsers, enabling developers to write modern JavaScript code without worrying about compatibility issues with older browsers.

Here's an example of what a typical polyfills.ts file might look like:

// Polyfills for core-js features import 'core-js/es6/array'; import 'core-js/es6/object'; import 'core-js/es7/reflect'; import 'core-js/es6/promise'; // Polyfills for web APIs import 'zone.js/dist/zone'; // Included for Angular's zone.js // Other polyfills as needed

These polyfills are typically installed and managed via dependencies such as core-js, zone.js, and other polyfill libraries. They are loaded and executed at the beginning of the application to ensure that the necessary features are available before any other code is executed.

» What is NgZone?

NgZone is a core Angular service that provides a way to execute code outside of Angular's change detection zone or to explicitly run code within Angular's zone. Angular's change detection mechanism runs inside a zone, which is a context where Angular tracks and manages asynchronous operations such as event handling, HTTP requests, timers, and so on.

The primary purpose of NgZone is to facilitate better integration with non-Angular code and to manage asynchronous tasks that occur outside of Angular's zone. It allows you to explicitly control when Angular's change detection runs and when it does not, which can be useful for optimizing performance and avoiding unnecessary change detection cycles.

Some key features and uses of NgZone include:

- Running code outside Angular's zone: You can use NgZone.runOutsideAngular() method to execute code that doesn't need to trigger change detection. This can be useful for performance-critical tasks or for integrating with third-party libraries that are not zone-aware.

- Triggering change detection manually: You can use NgZone.run() method to explicitly trigger change detection after performing certain asynchronous operations. This ensures that any changes made within the operation are reflected in the UI.

- Monitoring zone activity: You can subscribe to onStable and onUnstable events to be notified when Angular's zone becomes stable or unstable, respectively. This can be useful for debugging or monitoring application performance.

Here's a simple example demonstrating the use of NgZone:

import { Component, NgZone } from '@angular/core'; @Component({ selector: 'app-root', template: ` <button (click)="runOutsideZone()">Run Outside Zone</button> ` }) export class AppComponent { constructor(private ngZone: NgZone) {} runOutsideZone() { this.ngZone.runOutsideAngular(() => { // Execute code outside Angular's zone setTimeout(() => { console.log('Timeout executed outside zone'); // Manually trigger change detection this.ngZone.run(() => { console.log('Change detection triggered'); }); }, 1000); }); } }

In this example, clicking the button triggers the runOutsideZone() method, which executes a setTimeout callback outside Angular's zone using NgZone.runOutsideAngular(). Inside the callback, another NgZone.run() call is used to manually trigger change detection and update the UI. This demonstrates how NgZone can be used to manage asynchronous tasks and control change detection in an Angular application.

» What is EventEmitter?

In Parent-Child component, if we want to send some data from child component to parent component we use the @output decorator with EventEmitter to create a custom event. EventEmitters are RxJS Subjects behind the scenes.

» Dependancies vs DevDependencies

The difference between these two, is that devDependencies are modules which are only required during development, while dependencies are modules which are also required at runtime.

To save a dependency as a devDependency on installation we need to do an npm install --save-dev, instead of just an npm install --save. A nice shorthand for installing a devDependency that I like to use is npm i -D. The shorthand for saving a regular dependency is -S instead of -D

» Explain Token Based Authentication

The Token-based authentication has received expansion over last few years due to RESTful Web APIs, SPA and so on. The Token based authentication is stateless.

Stateless means every transaction is performed as if it was being done for the very first time and there is no previously stored information used for the current transaction.

Token Based Authentication steps - A user enters their login credentials and the server verifies the entered credentials. Validating to the entered credentials, It’s correct or not. If the credentials are correct, returns a signed token. This token is stored in local storage on the client side. We can also store in session storage or cookie.

» Error logging and caching in Angular

The default implementation of ErrorHandler log error messages, status, and stack to the console. To intercept error handling, we write a custom exception handler that replaces this default as appropriate for your app.

import { Injectable, ErrorHandler } from '@angular/core'; import {ErrorLoggService} from './error-logg.service'; // Global error handler for logging errors @Injectable() export class GlobalErrorHandler extends ErrorHandler { constructor(private errorLogService: ErrorLoggService) { //Angular provides a hook for centralized exception handling. //constructor ErrorHandler(): ErrorHandler super(); } handleError(error) : void { this.errorLogService.logError(error); } }

» ForRoot vs ForChild

- ForRoot: Use in root modules like app.modules. It creates a module that contains all the other directives, given routes and other router service itself.

- ForChild: Use in child modules. It creates a module that contains all the other directives, given routes but does not include router service.

» What is resolver in Angular

A resolver is a service that pre-fetches data required for a route before the route is activated and the component is rendered.

Resolvers are typically used to ensure that the data required by a component is available before the component is displayed to the user.

Resolvers are implemented as Angular route guards and are specified in the route configuration.

Resolvers are ideal for fetching data from APIs or performing other asynchronous tasks before routing to a specific component.

import { Injectable } from '@angular/core'; import { Resolve } from '@angular/router'; import { Observable } from 'rxjs'; import { DataService } from './data.service'; @Injectable({ providedIn: 'root' }) export class DataResolver implements Resolve<any> { constructor(private dataService: DataService) {} resolve(): Observable<any> { return this.dataService.getData(); } }

Usage in route configuration:

const routes: Routes = [ { path: 'example', component: ExampleComponent, resolve: { data: DataResolver } } ];

» What is ng-template

ng-template is an Angular element which we used with structural directives like *ngIf, *ngFor, *ngSwitch to display content based on the condition. You can say ng-template is kind of virtual element which only displays when some condition is true.

Here's the in depth article about ng-template

<div *ngIf="isVisible; then showTemplate1 else showTemplate2"></div> <ng-template #showTemplate1>Template 1</ng-template> <ng-template #showTemplate2>Template 2</ng-template>

» What is TemplateRef and ViewContainerRef

- TemplateRef is a class.

- Any structural directive access the content of

element through TemplateRef class. - ViewContainerRef as the name says, is the container of a view.

- As per angular.io it represents a container where one or more views can be attached to a component.

TemplateRef and ViewContainerRef are two important concepts in Angular that are used to work with dynamic templates and dynamically render components.

TemplateRef:

TemplateRef represents an Angular template that can be instantiated to create views.

It provides a reference to the template's content, which can include HTML and Angular directives.

TemplateRef is typically used in conjunction with structural directives like *ngFor and *ngIf to dynamically render content based on data or conditions.

Example:

import { Component, ViewChild, TemplateRef } from '@angular/core'; @Component({ selector: 'app-example', template: ` <ng-container *ngTemplateOutlet="myTemplate"></ng-container> ` }) export class ExampleComponent { @ViewChild('myTemplate') myTemplate: TemplateRef<any>; name = 'John'; }

<ng-template #myTemplate> <p>Hello, {{ name }}</p> </ng-template>

ViewContainerRef:

ViewContainerRef represents a container where one or more views can be attached or removed dynamically.

It provides methods for creating, inserting, and removing views within the container.

ViewContainerRef is used in conjunction with TemplateRef to create dynamic components or render dynamic content.

Example:

import { Component, ViewChild, TemplateRef, ViewContainerRef } from '@angular/core'; @Component({ selector: 'app-example', template: ` <ng-template #myTemplate> <p>Hello, {{ name }}</p> </ng-template> <ng-container #container></ng-container> ` }) export class ExampleComponent { @ViewChild('myTemplate') myTemplate: TemplateRef<any>; @ViewChild('container', { read: ViewContainerRef }) container: ViewContainerRef; name = 'John'; constructor() {} ngOnInit() { // Create and attach the view to the container const view = this.container.createEmbeddedView(this.myTemplate, { $implicit: this.name }); } }

In the above examples, TemplateRef represents a template with dynamic content, while ViewContainerRef is used to create a container where the template can be dynamically rendered. The createEmbeddedView() method of ViewContainerRef is used to instantiate the template and attach it to the container. This allows for the dynamic creation and rendering of content within an Angular application.

» Structural Directives Vs Attribute Directives

- Structural Directives are responsible for HTML layout. They shape or reshape the DOM structure by adding, removing or manipulating element.

- Ex. *ngIf, *ngFor, *ngSwitch

- The asterisk (*) states it is a structural directives.

- Attribute Directives just changes the behaviour and appearance of element.

- Ex. ngClass, ngStyle

» Custom Directives

Creating a custom directive is same like creating a component. You just need to use @directive instead of @component decorator.

» Angular's Dependency Injection

Dependency injection is a design pattern that allows components and services to be easily shared and reused across an application. In Angular, dependency injection is a core feature that helps manage dependencies between different parts of the application.

At a high level, dependency injection works by allowing a component or service to declare its dependencies in its constructor. When the component or service is created, Angular automatically resolves these dependencies and provides them to the component or service. This makes it easy to swap out components and services without having to modify the code that uses them.

Here's an example of how dependency injection works in Angular:

Suppose you have an Angular application that displays a list of customers. The application has a customer service that provides data about the customers, and a customer component that displays the list of customers.

The customer component needs to get data from the customer service to display the list of customers. Instead of creating an instance of the customer service inside the component, you can use dependency injection to inject the customer service into the component.

Here's how you might declare the customer service as a dependency in the customer component's constructor:

import { Component } from '@angular/core'; import { CustomerService } from './customer.service'; @Component({ selector: 'app-customer', templateUrl: './customer.component.html' }) export class CustomerComponent { constructor(private customerService: CustomerService) {} }

In this example, the customer component declares a dependency on the CustomerService by including it as a parameter in the constructor. The private keyword tells Angular to create a private instance of the customer service and inject it into the component.

Now, when the customer component is created, Angular automatically resolves the CustomerService dependency and provides an instance of the service to the component. This allows the component to use the customer service to get data about the customers, without having to create an instance of the service itself.

Overall, dependency injection is a powerful and flexible way to manage dependencies between different parts of an Angular application. It allows components and services to be easily shared and reused, making it easier to build and maintain large, complex applications.

» Hierachical Injector

Angular Dependency Injector is actually a hierachical injector means that if we provide a service in some place of our application ex. UserComponentthe Angular knows how to create instance of service for UserComponent and for all its child components. So which means UserComponent and its child components and the child component of child component will receive the same instance of the service.

We can also provide service to other places. Ex:

- AppModule: If we provide service in

AppModulethen same instance of the class of the service is available in entire application i.e. in all components, all directives and in all other services where we inject service. - AppComponent: Same instance of service is available for all Components including its children but not for other services.

- Any other component: Same instance of the Service is available for the Component and all its child components.

Note: Instances don't propogate up they only go down of the components tree. Ex. Lets say we have one, two and three components and we inject service to component two then only component three can receive the instance and not the component one.

» What is TypeScript?

- TypeScript is a superset of JavaScript.

- Unlike JavaScript (which is dynamically typed language) TypeScript is a static typed language.

- What TypeScript does is just adds some extra feature on top of JavaScript.

- Compiler of TypeScript written in TypeScript itself and it compiles TypeScript code into JavaScript so that browser can understand.

» Data Types in TypeScript

Following are some main data types in typescript:

// String let myString: string = "Programming World"; // with string interpolation let myText: string = `Welcome to my blog`; --------------------------- // Number let myNumber: number = 100; --------------------------- // Boolean let myBoolean: boolean = true; --------------------------- // Array let sport: string[] = ['cricket', 'football', 'tennis']; let sport1: Array<string> = ['badminton', 'hockey', 'rugby']; let numArr: number[] = [100, 200, 300]; let numArr1: Array<number> = [100, 200, 300]; --------------------------- // Object let person: object = { name: 'John', age: 40 }; --------------------------- // Tuple // It allows us to express an array with known but not same types. // declaration let myTupleArr: [string, number]; // correct initialisation myTupleArr = ["ProgrammingWorld", 100]; // incorrect initialisation myTupleArr = [100, "ProgrammingWorld"]; // Accessing console.log(myTupleArr[0]); console.log(myTupleArr[1]); // while accessing you have to sure following: console.log( myTupleArr[0].substring(1)); // correct console.log( myTupleArr[1].substring(1)); // error, 'number' does not have 'substring' --------------------------- // null let noVal: null = null; --------------------------- // undefined let nothing: undefined = undefined;

» Dynamically Typed Language vs Static Typed Language

- In Static Typed Language you have to define type of variable by declaring it explicitly before using them. While in Dynamic Typed Language dosen’t have this contraint.

- In Static Typed Language type checking is done during compile time while in Dynamic Typed Language it is done during runtime.

- Static Typed Language makes your development slower as you need to take care of types of variable and other related things. While in Dynamic Typed Language you don’t required all of these hence development is bit faster as compared to Static Typed Language.

- Install TypeScript:

npm install -g typescript// g for global- type command

tscto check version of TypeScript

» What is ViewEncapsulation in Angular

Before understading the ViewEncapsulation in Angular we need to first know about Shadow DOM.

Angular is based on the Web Components and Shadow DOM is one the core feature of it which enables DOM tree and style encapsulation.

Shadow DOM lets us include styles in Web Components without letting them leak outside the component's scope. Angular also provide this feature for Components and we can manage it through encapsulation property.

There are 3 ViewEncapsulation types in Angular.

- ViewEncapsulation.Emulated : The default behaviour of Angular's encapsulation. In this mode Angular changes generic CSS class selector to one that targets just a single component type by generating some auto attributes. So any style we define in one component don't leak out to the rest of the application and but our component still can inherit global styles.

- ViewEncapsulation.Native/ShadowDom: In this case if we applied styles to our component then don't leak outside of that component's scope but our component cannot inherit global styles and we need to define all the required global styles on our component's decorator. ViewEncapsulation.Native requires a feature called the shadow DOM which is not supported by all browsers.

- ViewEncapsulation.None: Styles defined in a component will get applied to all the components of the application, in this case angular will not attach 'component-specific-attributes' to the elements of component.

More detailed tutorials on this:

Shadow DOM strategies-in-angular

Scoping Your Styles in Angular With ViewEncapsulation

» What is @ViewChild

Using @ViewChild decorator you can directly select an element from component's template.

Example:

// app component import { Component, ViewChild } from "@angular/core"; @Component({ selector: "my-app", templateUrl: "./app.component.html", styleUrls: ["./app.component.css"] }) export class AppComponent { name = "Angular"; @ViewChild("myname", { static: false }) myName; handler() { console.log(this.myName); } } // app component template (app.component.html) <input #myname (keypress)="handler()">

Here, we have an input type text with reference variable #myName. We are calling a method on keypress for input element and in the componen'ts handler method getting the reference of it using @ViewChild decorator which is declared as @ViewChild("myname", { static: false }) myName;

Demo: https://stackblitz.com/edit/angular-6gwwea?file=src%2Fapp%2Fapp.component.ts

» What is @NgModule?

An NgModule is a class marked by the @NgModule decorator. @NgModule takes a metadata object that describes components, pipes, directives, services etc. In simple words @NgModule is to declare each thing you create in Angular and group them together.

Following are the main parts of NgModule's metadata object:

- declarations: It is used to declare components, directives, pipes that belongs to the current module. Once declared here you can use them throughout the whole module. Everything inside declarations knows each other.

- exports: If you want to expose your module's component, directive or pipes to other modules available in the application then you can declare it in exports property.

- imports: It is used to import supporting modules likes FormsModule, RouterModule, CommonModule, or any other custom-made feature module.

- providers: It is used to inject the services required by the components, directives, pipes in our module.

- bootstrap: To bootstrap/launch the component, the AppComponent is by default.